What Is a Load Balancer: A Comprehensive Guide

Introduction

Dealing with an overloaded server presents a choice: either increase its capacity or distribute the workload. Scaling up by adding more resources (CPU, memory, storage) to the server can improve performance, but a single machine can only grow so far. Scaling out by spreading workloads across multiple servers offers nearly limitless scalability and better availability.

The essence of load balancing lies in distributing workloads to improve efficiency. This guide explains how load balancing works and what advantages it can bring to your workloads. It also explores the main approaches and algorithms, to help you choose the ones that best fit your requirements.

What is Load Balancing?

Load balancing is the practice of distributing client requests across multiple servers so that no single server is overloaded. Initially, load balancers were dedicated hardware devices placed in front of physical servers in data centers. Today, cloud-based servers can use software solutions such as Akamai NodeBalancers to fulfill the same function.

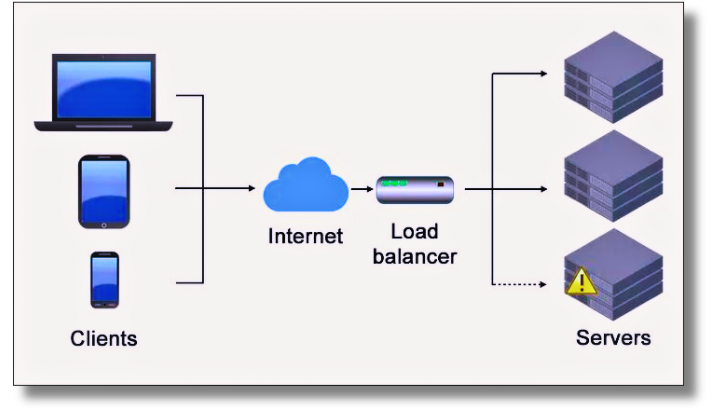

In essence, load balancers facilitate the real-time management of incoming client requests, evaluating which backend servers are optimally equipped to handle them. To ensure that no single server is overwhelmed, the load balancer intelligently directs requests to various available servers, whether they are on-premises, in server farms, or hosted in cloud environments.

Load balancers range widely in complexity. The most basic versions simply hand each incoming request to the next server in a fixed rotation. More advanced load balancers track server conditions to determine which server can respond most effectively at any given moment. Some can even operate across multiple data centers, distributing workloads internationally to optimize response times.

Types of Load Balancers

While the basic purpose of any load balancer is to distribute traffic, several types of load balancers exist that serve specific functions, such as:

Network Load Balancers (Layer 4 Load Balancers): Network load balancers improve network efficiency and reduce latency on local and wide area networks. They make routing decisions using only network and transport-layer details, such as IP addresses, destination ports, and the TCP/UDP protocol, without inspecting the content of the traffic, which lets them handle large volumes of connections with little overhead.
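As a rough sketch of how a Layer 4 balancer can choose a backend from these fields alone, the Python below hashes a connection's 5-tuple (protocol, source and destination IP, source and destination port). This is one common approach, not the only one; the function name and addresses are hypothetical. A hash from `hashlib` is used because Python's built-in `hash()` for strings is randomized per process.

```python
import hashlib


def pick_backend_l4(protocol: str, src_ip: str, src_port: int,
                    dst_ip: str, dst_port: int, backends: list[str]) -> str:
    """Hypothetical helper: map a connection's 5-tuple to one backend.

    Only network/transport-layer fields are used; the payload is never inspected.
    """
    key = f"{protocol}|{src_ip}|{src_port}|{dst_ip}|{dst_port}".encode()
    digest = hashlib.sha256(key).digest()
    # Interpret the first 8 bytes as an integer and reduce it to a backend index.
    index = int.from_bytes(digest[:8], "big") % len(backends)
    return backends[index]


backends = ["10.0.0.11", "10.0.0.12", "10.0.0.13"]
first = pick_backend_l4("TCP", "203.0.113.5", 51514, "198.51.100.10", 443, backends)
again = pick_backend_l4("TCP", "203.0.113.5", 51514, "198.51.100.10", 443, backends)
print(first == again)  # True: packets of the same connection reach the same backend
```

Because the mapping is deterministic, every packet belonging to one connection lands on the same backend without the balancer looking at what the packets carry.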

Application Load Balancers (Layer 7 Load Balancers): These load balancers use application content, including URLs, SSL sessions, and HTTP headers, to direct request traffic such as API calls. By analyzing application-level content, they can recognize which application servers provide the function a request needs and send it to a server that can fulfill it promptly and consistently.
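A minimal sketch of Layer 7 content-based routing, assuming a hypothetical routing table keyed on the URL path prefix and the `Host` header. All group names, hostnames, and prefixes below are illustrative, not taken from any particular product.

```python
# Hypothetical server groups; a real deployment would load these from configuration.
API_SERVERS = ["api-1", "api-2"]
VIDEO_SERVERS = ["video-1", "video-2"]
STATIC_SERVERS = ["static-1"]
DEFAULT_SERVERS = ["web-1", "web-2", "web-3"]

# Path prefixes checked in order; the first match wins.
PATH_ROUTES = [
    ("/api/", API_SERVERS),
    ("/video/", VIDEO_SERVERS),
]


def choose_group(path: str, headers: dict[str, str]) -> list[str]:
    """Pick a server group by inspecting application-layer content."""
    for prefix, group in PATH_ROUTES:
        if path.startswith(prefix):
            return group
    # HTTP headers can drive routing as well, e.g. a dedicated hostname.
    if headers.get("Host") == "static.example.com":
        return STATIC_SERVERS
    return DEFAULT_SERVERS


print(choose_group("/api/users/42", {"Host": "www.example.com"}))  # ['api-1', 'api-2']
print(choose_group("/logo.png", {"Host": "static.example.com"}))   # ['static-1']
```

Once a group is chosen, any of the algorithms described later in this guide can pick the individual server within it.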

Virtual Load Balancers: With the evolution of virtualization technologies such as VMware, virtual load balancers have become essential for distributing traffic among servers, virtual machines, and containers. Open-source container orchestration tools such as Kubernetes provide built-in load balancing (for example, Kubernetes Services) that distributes requests among the pods running in a cluster.

Cloud-based Load Balancers: Cloud-native load balancers do more than absorb traffic fluctuations and track server performance. Some also provide analytics that help identify potential traffic bottlenecks ahead of time, giving companies actionable insights for improving their IT infrastructure.

DNS Load Balancing: DNS load balancing configures a domain in the Domain Name System (DNS) so that lookups for it resolve to different servers in a pool, spreading user requests across those machines.
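The toy model below sketches the idea behind DNS round robin: every answer contains the same A records, rotated by one position per query. It assumes clients connect to the first address returned, which real resolvers and clients do not always do; the class name and addresses are hypothetical.

```python
from collections import deque


class RotatingDnsZone:
    """Toy model of DNS round robin for a single hostname (hypothetical class)."""

    def __init__(self, name: str, addresses: list[str]) -> None:
        self.name = name
        self._records = deque(addresses)

    def resolve(self, name: str) -> list[str]:
        if name != self.name:
            raise KeyError(f"no records for {name}")
        answer = list(self._records)
        self._records.rotate(-1)  # the next query starts with the next address
        return answer


zone = RotatingDnsZone("www.example.com", ["192.0.2.1", "192.0.2.2", "192.0.2.3"])
for _ in range(4):
    print(zone.resolve("www.example.com")[0])  # 192.0.2.1, 192.0.2.2, 192.0.2.3, 192.0.2.1
```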

HTTP(S) Load Balancing: HTTP(S) load balancing distributes HTTP and HTTPS traffic across multiple web or application server groups to optimize resource utilization.

Internal Load Balancing: An internal load balancer is assigned to a private subnet and has no public IP address. It distributes traffic among servers inside a private network, such as a server farm, rather than traffic arriving from the internet.

Diameter Load Balancing: Diameter load balancing distributes signaling traffic for the Diameter protocol (used in telecom networks for authentication, authorization, and accounting) across multiple servers. Scaling the Diameter control plane, rather than the data transport layer, is a cost-effective way to achieve this. (Diameter load balancing can also be static or dynamic.)

Global Server Load Balancing: Global server load balancing (GSLB) directs traffic to servers located in multiple geographic regions to keep applications available. User requests are assigned to the closest server and, if that server fails, rerouted to another location with a working server. This failover capability plays a key role in disaster recovery planning.
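A minimal sketch of the GSLB decision, assuming each region's proximity to the client is already known as a distance (real systems may use geolocation or measured latency instead) and that each region's health flag is kept up to date by some external check. The `Region` class and its fields are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Region:
    name: str
    distance_km: float  # assumed proximity metric between this client and the region
    healthy: bool       # assumed to be maintained by periodic health checks


def pick_region(regions: list[Region]) -> Region:
    """Return the closest healthy region, failing over when the nearest one is down."""
    candidates = [r for r in regions if r.healthy]
    if not candidates:
        raise RuntimeError("no healthy region available")
    return min(candidates, key=lambda r: r.distance_km)


regions = [Region("eu-west", 300, True), Region("us-east", 6000, True)]
print(pick_region(regions).name)  # eu-west
regions[0].healthy = False        # simulate a regional outage
print(pick_region(regions).name)  # us-east
```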

Benefits of Load Balancing

Availability: Load balancers run health checks on backend servers and route requests only to servers that pass them. If a server is failing, offline for maintenance, or being upgraded, the load balancer automatically redirects its workload to working servers, preventing service disruptions and maintaining high availability.

The load balancer itself must not become a single point of failure. A common way to achieve this is to deploy it in a high availability (HA) configuration, mirrored on at least two different machines. If the primary machine experiences an outage, traffic is automatically rerouted to a secondary machine with the same load balancer setup. In high-stakes situations, a global server load balancer can also redirect requests to a standby data center if the primary data center is unreachable.
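The health checks described under Availability can be as simple as attempting a TCP connection. The sketch below is a minimal version of that idea; the function names are hypothetical, and production load balancers typically run richer checks (for example, requesting an HTTP status endpoint) on a schedule rather than once per request.

```python
import socket
from typing import Callable


def tcp_health_check(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False


def healthy_pool(servers: list[tuple[str, int]],
                 check: Callable[[str, int], bool] = tcp_health_check) -> list[tuple[str, int]]:
    """Keep only the servers that currently pass the health check."""
    return [(host, port) for host, port in servers if check(host, port)]
```

Passing the check function as a parameter keeps the pool logic independent of how health is measured, so an HTTP-based check could be swapped in without changing `healthy_pool`.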

Scalability: Load balancing keeps server capacity matched to client demand, especially in a cloud setup. Sudden traffic spikes (so-called flash crowds) may require temporary boosts in server capacity, while steady traffic growth calls for regular expansion of your pool of cloud servers. In either case, the load balancer distributes client requests across the servers currently in the pool, so capacity can be added or removed as demand changes.

Security: Load balancers can be equipped with security features such as SSL/TLS encryption, web application firewalls (WAF), and multi-factor authentication (MFA). They can also be integrated into application delivery controllers (ADC) to strengthen application security. By routing traffic securely and offloading network processing from the servers, load balancing also provides a line of defense against threats such as distributed denial-of-service (DDoS) attacks.

Managing Incoming Traffic: Because load balancers process and evaluate client traffic before it reaches the servers, they can decide how each request should be handled. For example, instead of distributing client requests uniformly to all servers, you might direct video content requests to a dedicated server group while routing all other requests to different servers. This also brings a security advantage, since load balancers can filter out unwanted traffic and so reduce the load on your servers.

How Do Load Balancers Work?

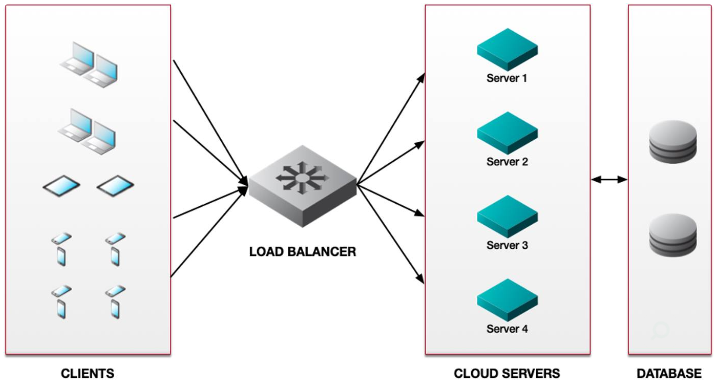

Load balancers, also referred to as "middleboxes", act as reverse proxies that mediate communication between clients and servers. A typical setup configures DNS to map www.example.com to the client-facing IP address(es) of the load balancer. When users want to view the website, they send HTTP/HTTPS GET requests to the load balancer, which, acting as a reverse proxy, allocates those requests among the back-end servers or server groups. The diagram above gives a simple illustration of how a load balancer distributes client requests among web servers. It also shows an additional tier of database servers behind the web servers, a configuration often chosen so that every web server delivers consistent content. This is also an example of static load balancing.

When a client request reaches the load balancer, the load balancer must decide which server should handle it. The algorithms that make this decision can be classified as static or dynamic, each taking a different approach to making efficient use of the servers.

Static load balancing distributes load according to fixed rules, for example based on the known performance capacity of each server in the network, without regard to the servers' current state. Dynamic load balancing, by contrast, redistributes load in real time based on current server conditions, which makes it suitable for systems with highly fluctuating incoming load.
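To make the static case concrete: a static scheme can turn each server's known capacity into a fixed weight and cycle through servers in proportion to it, never consulting live load. The sketch below is a simple weighted round robin with hypothetical capacities, shown as one example of a static scheme.

```python
import itertools
from collections import Counter
from typing import Iterator


def static_schedule(capacities: dict[str, int]) -> Iterator[str]:
    """Repeat each server in proportion to its fixed capacity weight, forever."""
    expanded = [server for server, weight in capacities.items() for _ in range(weight)]
    return itertools.cycle(expanded)


schedule = static_schedule({"large": 3, "small": 1})
picks = [next(schedule) for _ in range(8)]
print(Counter(picks))  # Counter({'large': 6, 'small': 2})
```

This naive expansion sends consecutive requests to the same server in bursts; smoother interleavings exist, but any scheme that fixes the weights in advance is still static. Dynamic algorithms such as least connections instead look at live server state on every decision.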

It is common for companies to experience fluctuations in traffic, some foreseeable and some not, and dynamic load balancing can be advantageous for such organizations. Examples include a healthcare company offering online vaccine appointments, a government agency handling weekly unemployment claims, or a relief organization responding to a natural disaster. By using a dynamic load balancing algorithm, these organizations can keep apps and resources accessible during periods of peak demand.

Common Load Balancing Algorithms

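Round robin: This method distributes requests evenly by rotating through a list of servers, sending each new request to the next server in turn. A common implementation is DNS round robin, in which the Domain Name System (DNS) rotates the server addresses it returns. (Note that DNS load balancing can also provide a dynamic solution.)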
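A minimal in-process round-robin picker might look like the following; the class name is hypothetical, and the lock assumes requests may be dispatched from several threads at once.

```python
import threading


class RoundRobin:
    """Hand out servers in a fixed rotation (hypothetical helper class)."""

    def __init__(self, servers: list[str]) -> None:
        if not servers:
            raise ValueError("need at least one server")
        self._servers = list(servers)
        self._index = 0
        self._lock = threading.Lock()  # protects _index across concurrent requests

    def next_server(self) -> str:
        with self._lock:
            server = self._servers[self._index]
            self._index = (self._index + 1) % len(self._servers)
            return server


rr = RoundRobin(["web-1", "web-2", "web-3"])
print([rr.next_server() for _ in range(4)])  # ['web-1', 'web-2', 'web-3', 'web-1']
```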

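Threshold: Tasks are allocated based on a threshold value predetermined by the administrator; for example, a server keeps receiving new tasks until its load reaches the threshold, after which tasks are sent to other servers.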
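The threshold rule can be implemented in several ways. One plausible reading, sketched below under that assumption, is that a server accepts new tasks only while its current load is below the administrator's threshold. The function name and load figures are hypothetical.

```python
from typing import Optional


def pick_below_threshold(loads: dict[str, int], threshold: int) -> Optional[str]:
    """Return the first server (in insertion order) whose current load is below `threshold`."""
    for server, load in loads.items():
        if load < threshold:
            return server
    return None  # every server is at or above the threshold


print(pick_below_threshold({"app-1": 12, "app-2": 4, "app-3": 0}, threshold=10))  # app-2
print(pick_below_threshold({"app-1": 12, "app-2": 11}, threshold=10))             # None
```

What to do when no server qualifies (queue the task, reject it, or add capacity) is a policy decision left outside this sketch.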

Random with two choices: The "power of two choices" approach randomly selects two servers and sends the request to the less loaded of the pair, judged by either the Least Connections or the Least Time algorithm, depending on the configuration.
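A sketch of the power-of-two-choices step using active connection counts as the load measure (the Least Connections flavor). The server names and counts are hypothetical, and a seeded `random.Random` is passed in only to make runs repeatable.

```python
import random


def power_of_two_choices(active: dict[str, int], rng: random.Random) -> str:
    """Sample two distinct servers at random and return the one with fewer active connections."""
    first, second = rng.sample(list(active), 2)
    return first if active[first] <= active[second] else second


rng = random.Random(42)
active = {"app-1": 1, "app-2": 2, "app-3": 9}
picks = [power_of_two_choices(active, rng) for _ in range(1000)]
print("app-3" in picks)  # False: the busiest server always loses its comparison
```

Each decision reads only two load counters instead of scanning the whole pool, yet the most loaded server is never chosen whenever a less loaded one is available.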

Least connections: A new request is directed to the server with the fewest active client connections. In weighted variants, each server's computing capacity is also taken into account, so the choice goes to the server with the fewest connections relative to its capacity, or the one using the least of its resources.
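A minimal sketch of the weighted variant: divide each server's active connections by a capacity weight and choose the smallest ratio. The capacity numbers are hypothetical; setting every weight to 1 gives plain least connections.

```python
def least_connections(active: dict[str, int], capacity: dict[str, int]) -> str:
    """Return the server with the fewest active connections relative to its capacity."""
    return min(active, key=lambda server: active[server] / capacity[server])


active = {"big": 10, "small": 6}
print(least_connections(active, {"big": 4, "small": 1}))  # big   (10/4 = 2.5 < 6/1 = 6)
print(least_connections(active, {"big": 1, "small": 1}))  # small (plain least connections)
```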

Least time: This method sends each request to the server selected by a formula that combines the fastest response time with the fewest active connections.
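Vendors use different formulas for least time, so the scoring below is purely a hypothetical example: multiply each server's average response time by its active connections plus one, and pick the lowest score.

```python
def least_time(avg_response_ms: dict[str, float], active: dict[str, int]) -> str:
    """Pick the server with the lowest hypothetical score: latency x (active connections + 1)."""
    return min(avg_response_ms, key=lambda s: avg_response_ms[s] * (active[s] + 1))


latency = {"app-1": 20.0, "app-2": 50.0}
print(least_time(latency, {"app-1": 4, "app-2": 0}))  # app-2 (score 50 beats 100)
print(least_time(latency, {"app-1": 0, "app-2": 0}))  # app-1 (score 20 beats 50)
```

Adding one to the connection count keeps an idle server's score equal to its latency rather than zero, so response time still matters when nothing is in flight.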

URL hash: This technique computes a hash value from the URL in each client request and forwards the request to a server based on that hash value. Because the same URL always produces the same hash, subsequent requests for that URL are directed to the same server (as long as the server pool does not change).

Source IP hash: This method combines the client's source and destination IP addresses into a hash key that links the client to a specific server. If the session disconnects, the same key is regenerated from the same addresses, so reconnection requests are directed to the same server.
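URL hash and source IP hash differ only in what they feed into the hash. The sketch below (hypothetical function names, with SHA-256 from `hashlib` as an arbitrary choice of stable hash) maps a key to a server with a modulo, which is the simplest approach. Its drawback is that changing the number of servers remaps most keys, which is the problem consistent hashing addresses.

```python
import hashlib


def hash_pick(key: str, servers: list[str]) -> str:
    """Deterministically map a key to one server: same key, same server."""
    digest = hashlib.sha256(key.encode()).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


def url_hash_pick(url: str, servers: list[str]) -> str:
    return hash_pick(url, servers)


def source_ip_hash_pick(src_ip: str, dst_ip: str, servers: list[str]) -> str:
    # Combine source and destination addresses into a single hash key.
    return hash_pick(f"{src_ip}->{dst_ip}", servers)


servers = ["cache-1", "cache-2", "cache-3"]
print(url_hash_pick("/videos/intro.mp4", servers) == url_hash_pick("/videos/intro.mp4", servers))  # True
print(source_ip_hash_pick("203.0.113.5", "198.51.100.10", servers))
```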

Consistent hashing: Clients and servers are mapped onto a ring structure, with each server assigned multiple points on the ring in proportion to its capacity. Each client request is hashed to a point on the ring and routed clockwise to the next available server. When a server is added or removed, only the requests that fall next to that server's points are remapped; the rest keep their existing assignments.
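A minimal consistent-hashing ring in the spirit of the description above: each server gets a number of ring points proportional to a hypothetical capacity weight, and a request is routed to the first point clockwise from its hash, wrapping around at the end. Health checking of the "next available server" is left out to keep the sketch short.

```python
import bisect
import hashlib


def ring_position(value: str) -> int:
    """Hash a string to a 64-bit position on the ring."""
    return int.from_bytes(hashlib.sha256(value.encode()).digest()[:8], "big")


class ConsistentHashRing:
    def __init__(self, capacities: dict[str, int], points_per_unit: int = 50) -> None:
        # More capacity means more points on the ring, hence a larger share of requests.
        entries = sorted(
            (ring_position(f"{server}#{i}"), server)
            for server, capacity in capacities.items()
            for i in range(capacity * points_per_unit)
        )
        self._positions = [pos for pos, _ in entries]
        self._servers = [server for _, server in entries]

    def lookup(self, request_key: str) -> str:
        """Walk clockwise from the request's hash to the next server point."""
        index = bisect.bisect_right(self._positions, ring_position(request_key))
        return self._servers[index % len(self._servers)]  # wrap past the last point


before = ConsistentHashRing({"a": 1, "b": 1, "c": 1})
after = ConsistentHashRing({"a": 1, "b": 1})  # server "c" removed
keys = [f"client-{n}" for n in range(1000)]
moved = [k for k in keys if before.lookup(k) != after.lookup(k)]
print(all(before.lookup(k) == "c" for k in moved))  # True: only c's requests moved
```

By contrast, with plain modulo hashing, going from three servers to two would remap about two thirds of all keys.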

Conclusion

Load balancing enables near-limitless scalability to handle growing traffic volumes, and it improves availability, whether within a single data center or across multiple data centers worldwide. The key to optimizing performance lies in choosing the right type of load balancer and the right algorithms for your applications and services. Implemented properly, load balancing can greatly reduce server bottlenecks and downtime while speeding up responses to clients.